Technology can carry embedded biases that lead to societal injustices. In exploring the intricate web of algorithmic bias and social inequality, it becomes evident that AI systems are not neutral entities. To uncover the root of this hidden human rights crisis, we must investigate how AI perpetuates societal inequalities. For a deeper understanding of this issue, read more at Algorithmic Bias and Social Inequality: The Hidden Human …

The Unseen Biases

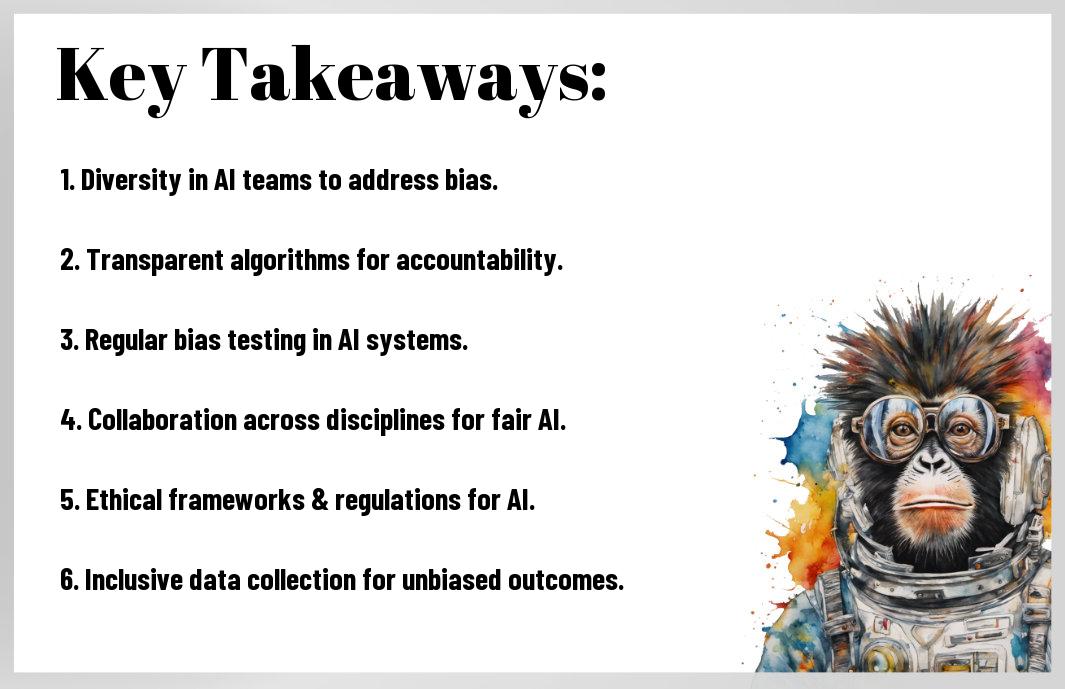

A key challenge in addressing the societal inequalities perpetuated by AI lies in uncovering and rectifying the unseen biases present in these systems. As highlighted in "How can we address gender bias and inequality in AI?", these biases often mirror and reinforce existing inequalities, particularly those tied to gender and race and those affecting other marginalized groups.

The Data Problem

Data lies at the heart of AI systems, and the biases present in the data used to train these systems can perpetuate societal inequalities. Without diverse and representative datasets, AI algorithms can inadvertently reinforce discriminatory patterns and exacerbate existing societal biases.
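One simple way to make the representativeness concern concrete is to compare each group's share of a training dataset with its share of the population the system will serve. The sketch below assumes that a group label is available for each record and that reference population shares are known; the function names `representation_gaps` and `underrepresented` and the 0.05 tolerance are hypothetical choices for illustration, not an established standard.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Return (dataset share - reference share) for each group in reference_shares.

    groups: one group label per training record (assumed to be available).
    reference_shares: hypothetical population shares, e.g. taken from census data.
    """
    if not groups:
        raise ValueError("dataset is empty")
    counts = Counter(groups)
    total = len(groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


def underrepresented(gaps, tolerance=0.05):
    """List groups whose dataset share falls short of their reference share
    by more than `tolerance` (an illustrative threshold, not a standard)."""
    return [group for group, gap in gaps.items() if gap < -tolerance]


# Toy example: a dataset that is 80% group "A" and 20% group "B",
# drawn from a population assumed to be split evenly between the two.
training_groups = ["A"] * 80 + ["B"] * 20
gaps = representation_gaps(training_groups, {"A": 0.5, "B": 0.5})
print(underrepresented(gaps))  # ['B']
```

A check like this only reveals gaps in who appears in the data. It cannot tell whether the labels themselves encode historical discrimination, so it complements, rather than replaces, an audit of the system's actual decisions.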

The Human Factor

Factors such as the lack of diversity in AI development teams, unconscious bias among programmers, and inadequate ethical guidelines all contribute to the perpetuation of societal inequalities through AI systems. To address this, we need to prioritize diversity in tech teams, implement rigorous bias detection mechanisms, and establish clear ethical standards for AI development and deployment.

Systemic Inequalities

Racial and Gender Bias in AI Decision-Making

AI systems often reflect the biases present in the data they are trained on. When such systems inform decisions about hiring, lending, or policing, they can perpetuate racial and gender disparities and produce unfair outcomes for marginalized groups.
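Disparities in decision outcomes can be measured directly. A widely cited heuristic, the "four-fifths rule" from U.S. employment-selection guidelines, treats a group's selection rate below 80% of the highest group's rate as a sign of possible adverse impact. The sketch below computes those ratios from a list of (group, selected) decisions; the function names and toy data are hypothetical, and passing this single check does not by itself establish that a system is fair.

```python
def selection_rates(decisions):
    """Compute the fraction of positive decisions per group.

    decisions: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals, positives = {}, {}
    for group, selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group received a positive decision")
    return {group: rate / best for group, rate in rates.items()}


def flag_adverse_impact(ratios, threshold=0.8):
    """Return groups whose ratio falls below the four-fifths threshold."""
    return [group for group, ratio in ratios.items() if ratio < threshold]


# Toy data: 30 of 50 applicants from group "A" selected, 15 of 50 from group "B".
decisions = (
    [("A", True)] * 30 + [("A", False)] * 20
    + [("B", True)] * 15 + [("B", False)] * 35
)
ratios = impact_ratios(selection_rates(decisions))
print(flag_adverse_impact(ratios))  # ['B']
```

Here group "B" is selected at half the rate of group "A", well under the 0.8 threshold. Selection-rate parity is only one of several fairness measures, and the choice of measure should follow from the decision being audited.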

Socio-Economic Disparities in AI Access and Education

For many individuals from disadvantaged socio-economic backgrounds, access to AI technologies and quality education in this field remains limited. This creates a widening gap between the privileged and underprivileged, further cementing societal inequalities.

The lack of resources and opportunities for those in lower socio-economic brackets inhibits their ability to benefit from AI advancements, perpetuating a cycle of inequality. Efforts must be made to provide equal access to AI education and tools to ensure a more inclusive future for all.

Addressing the Problem

Regulatory Oversight and Accountability

For effective change, regulatory oversight and accountability mechanisms must be strengthened to ensure that AI systems are developed and deployed ethically. Government bodies and industry watchdogs should work together to establish clear guidelines and consequences for non-compliance.

Diverse and Inclusive AI Development Teams

A key step toward creating fairer AI systems is fostering diverse and inclusive AI development teams. By bringing together individuals with varying perspectives, backgrounds, and lived experiences, we can challenge assumptions and biases that may be inadvertently embedded in the technology.

Diverse teams can better anticipate and address the potential societal impacts of AI systems, leading to more responsible and equitable solutions. Companies and organizations should prioritize diversity and inclusion in their hiring practices to cultivate a workforce that reflects the diverse communities AI technologies serve.

Final Words

In short, addressing the societal inequalities perpetuated by AI systems requires a multifaceted approach. It demands greater transparency and accountability from developers and policymakers, as well as diversity and inclusivity in AI teams. Thorough audits and oversight mechanisms can also help detect and mitigate biases in AI algorithms. By pursuing these strategies together, we can work toward more equitable and just AI systems that benefit all members of society.